LearnPath – Agentic Workflow Orchestrator & Reference Architecture

A production-ready blueprint for deterministic AI agents: Planner-Executor patterns, structured outputs, and polyglot microservices.

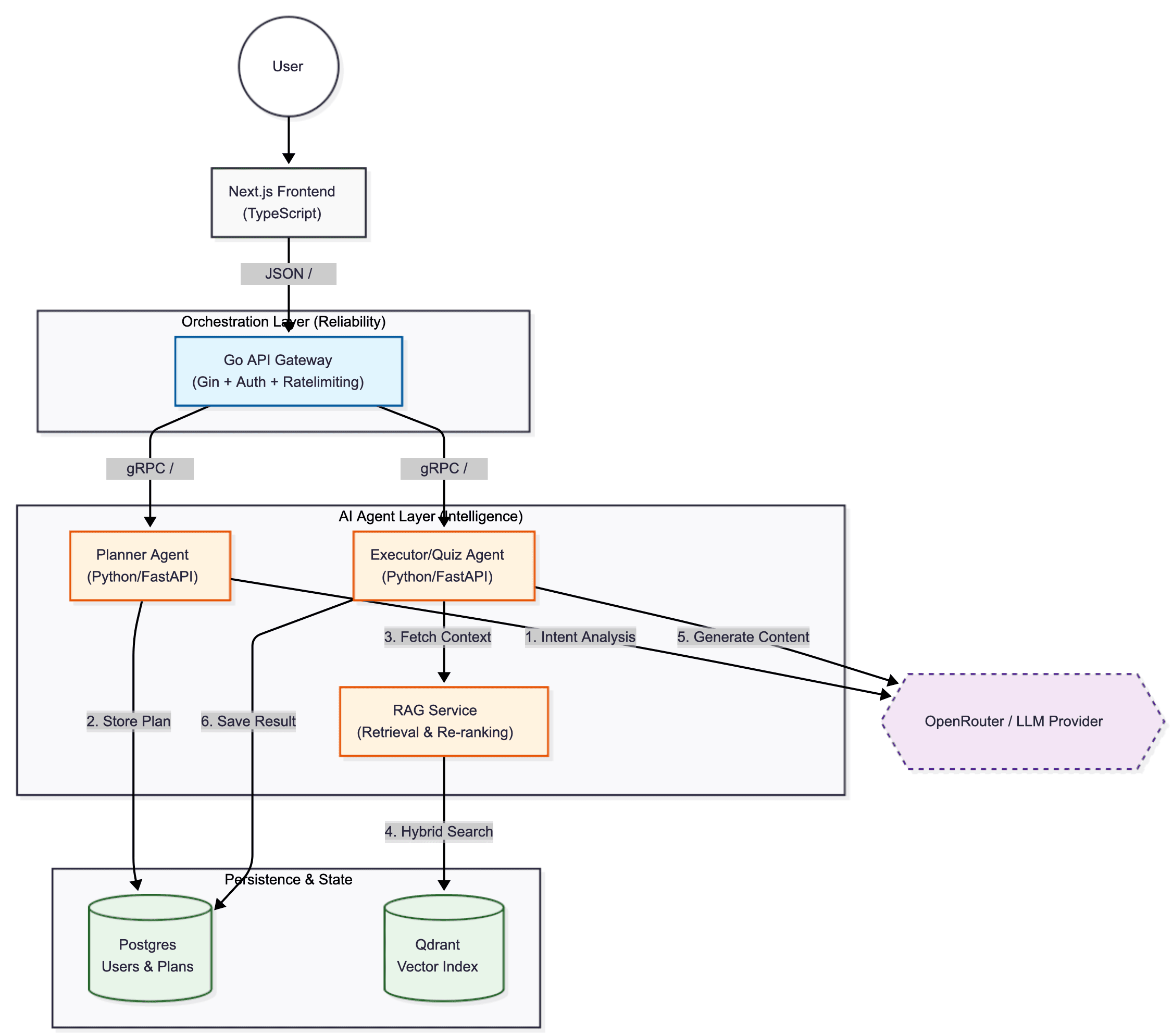

LearnPath is more than a learning tool: it is a reference architecture for building reliable, scalable AI agents in a distributed system.

It demonstrates one way to tame the "black box" problem of LLMs: the Planner-Executor pattern decomposes high-level goals into verifiable steps, and Structured Outputs (JSON schemas) ensure the UI never receives a malformed payload.

The system is engineered as a polyglot microservices stack: a Go Gateway handles orchestration and high-concurrency traffic, while isolated Python services handle the probabilistic AI logic (planning, RAG, quiz generation).

Tech Stack & Role

Stack: Go (Gin/Orchestrator), Python (FastAPI/AI Agents), Next.js (TypeScript), Qdrant (Vector Search), Postgres, Docker, OpenTelemetry.

I architected the system to bridge the gap between "Python AI prototypes" and "Go production systems." My focus was on reliability patterns: idempotent orchestration, structured schema validation, and hybrid retrieval pipelines.

Architectural Patterns Implemented

The system follows a strict separation of concerns, using Go for the reliability layer and Python for the intelligence layer.

Planner-Executor Agent Pattern: Instead of a single "do it all" prompt, the system uses a dedicated Planner Agent to propose a curriculum, which is then validated and fleshed out by specific Executor Agents. This ensures long-horizon tasks remain coherent.
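A minimal sketch of the propose, validate, execute loop described above. The names (`PlanStep`, `stub_planner`, `validate_plan`, `stub_executor`, `run`) and the hour-budget rule are assumptions made for illustration; the real agents call an LLM and are not shown here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PlanStep:
    topic: str
    hours: int


def stub_planner(goal: str) -> list[PlanStep]:
    """Hypothetical stand-in for the Planner Agent (a real one would call an LLM)."""
    return [PlanStep("Message queues", 4), PlanStep("Event sourcing", 6)]


def validate_plan(steps: list[PlanStep], budget_hours: int) -> list[PlanStep]:
    """Reject a proposed plan before any executor runs."""
    if not steps:
        raise ValueError("planner returned an empty plan")
    if any(step.hours <= 0 for step in steps):
        raise ValueError("every step needs a positive hour estimate")
    total = sum(step.hours for step in steps)
    if total > budget_hours:
        raise ValueError(f"plan needs {total}h but the budget is {budget_hours}h")
    return steps


def stub_executor(step: PlanStep) -> dict:
    """Hypothetical stand-in for an Executor Agent that fleshes out one step."""
    return {"topic": step.topic, "hours": step.hours, "outline": f"Outline for {step.topic}"}


def run(
    goal: str,
    budget_hours: int,
    planner: Callable[[str], list[PlanStep]] = stub_planner,
    executor: Callable[[PlanStep], dict] = stub_executor,
) -> list[dict]:
    # Validation sits between planning and execution, so a bad plan
    # never reaches the executors.
    steps = validate_plan(planner(goal), budget_hours)
    return [executor(step) for step in steps]
```

Calling `run("Master Event-Driven Systems", 20)` returns one fleshed-out entry per planned step, while a budget smaller than the plan's total raises `ValueError` before any executor is invoked.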

Structured Outputs & Determinism: To prevent UI crashes and "hallucinated" data formats, all AI services enforce strict JSON schemas. The Go gateway validates every response before it reaches the frontend, so the UI only ever receives payloads that match the expected types.
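The kind of check involved can be sketched as follows. In the project this validation lives in the Go gateway; the Python version below only illustrates the idea, and the field names in `LESSON_SCHEMA` are hypothetical rather than the project's actual schema.

```python
import json

# Hypothetical lesson contract: field name -> required Python type.
LESSON_SCHEMA = {"title": str, "duration_minutes": int, "source_ids": list}


def parse_lesson(raw: str) -> dict:
    """Parse an AI service response and reject anything off-contract."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    missing = LESSON_SCHEMA.keys() - data.keys()
    extra = data.keys() - LESSON_SCHEMA.keys()
    if missing or extra:
        raise ValueError(f"missing fields {sorted(missing)}, unexpected fields {sorted(extra)}")

    for field, expected in LESSON_SCHEMA.items():
        value = data[field]
        # bool is a subclass of int in Python; no field in this schema is a
        # boolean, so reject bools explicitly instead of letting them pass as int.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"field {field!r} must be {expected.__name__}")
    return data
```

A response that parses cleanly is returned as a plain dict; anything else raises `ValueError`, which the caller can turn into a retry or an error response instead of forwarding it to the UI.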

Advanced RAG & Hybrid Search: A Qdrant-based pipeline that combines dense vector search with metadata filtering. This grounds every generated lesson in real documentation, providing "citations" for every AI claim.

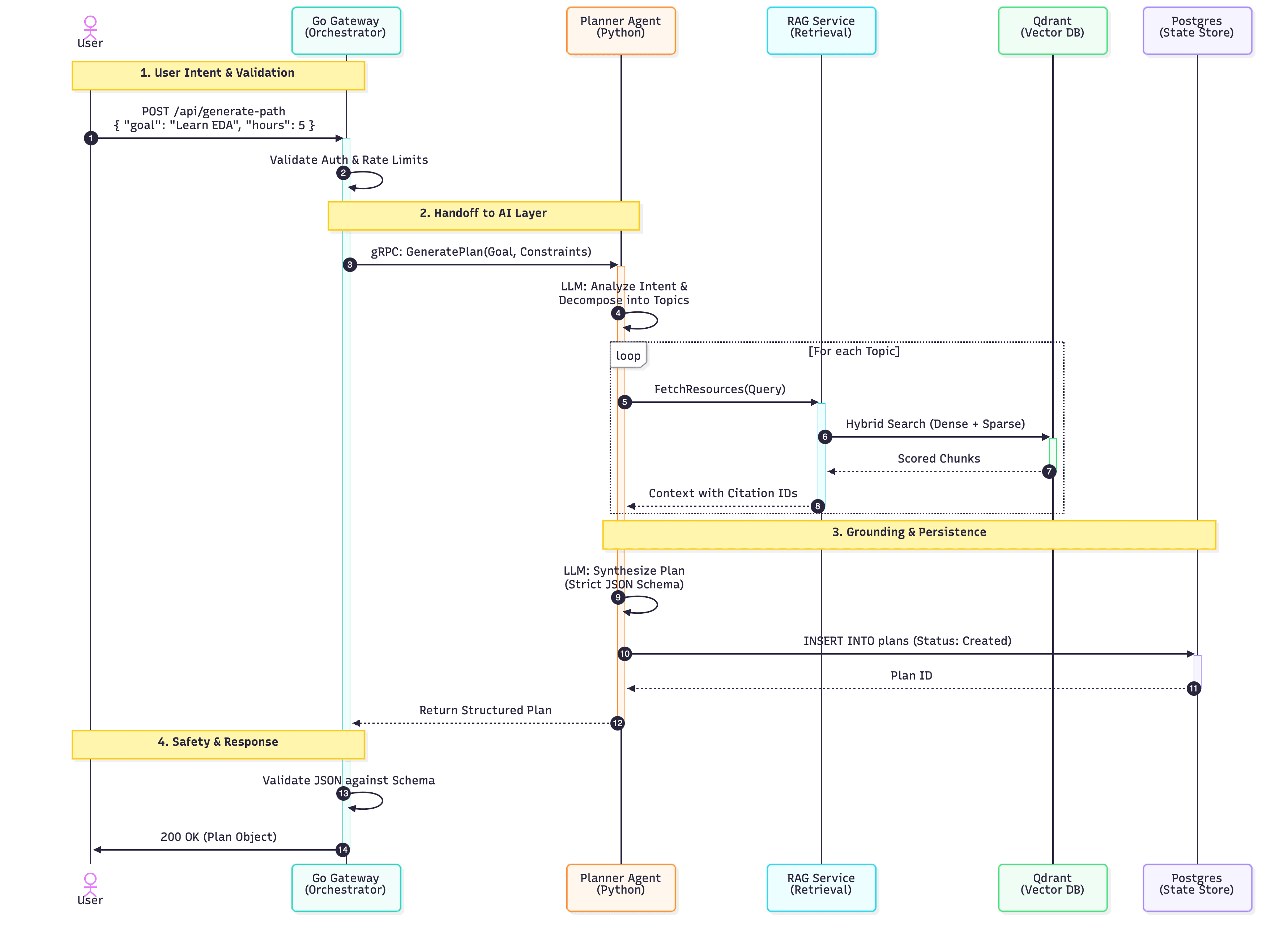

The User Journey (Agent Workflow)

The following sequence diagram illustrates how the system enforces determinism and grounding throughout the user's request:

1. Intent Analysis & Planning

The user sets a goal (e.g., "Master Event-Driven Systems"). The Planner Agent analyzes the time budget and prerequisites, generating a high-level DAG (Directed Acyclic Graph) of topics to cover.
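One way to turn such a topic DAG into a study order is a topological sort. The sketch below uses Python's standard `graphlib`; the function name `study_order` and the example topics are assumptions, and the planner's real output format is not shown in this write-up.

```python
from graphlib import CycleError, TopologicalSorter


def study_order(prerequisites: dict[str, set[str]]) -> list[str]:
    """Return topics ordered so that every prerequisite comes first.

    `prerequisites` maps each topic to the set of topics it depends on.
    """
    try:
        return list(TopologicalSorter(prerequisites).static_order())
    except CycleError as exc:
        # A cycle means the proposed plan is not a valid DAG.
        raise ValueError(f"plan contains a prerequisite cycle: {exc.args[1]}") from exc
```

For example, with `{"CQRS": {"Event sourcing"}, "Event sourcing": {"Message queues"}, "Message queues": set()}` the order is Message queues, then Event sourcing, then CQRS. A plan in which two topics require each other is rejected with `ValueError`.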

2. Semantic Retrieval (RAG)

For each proposed topic, the Retrieval Agent queries the Qdrant index. It uses hybrid search to fetch the most relevant technical documentation, filtering out low-quality or irrelevant matches.
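The retrieval step can be illustrated with a small in-memory stand-in. This is not the Qdrant client API: the `search` function, the point layout, and the `min_score` threshold are assumptions that only mirror the idea of filtering by payload first and then ranking by vector similarity.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(
    query_vector: list[float],
    points: list[dict],
    must: dict,
    limit: int = 3,
    min_score: float = 0.0,
) -> list[dict]:
    """Filter points by exact payload match, then rank the rest by similarity."""
    candidates = [
        p for p in points
        if all(p["payload"].get(key) == value for key, value in must.items())
    ]
    scored = [(cosine(query_vector, p["vector"]), p) for p in candidates]
    # Drop weak matches so they never reach the generation step.
    scored = [(score, p) for score, p in scored if score >= min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [{"id": p["id"], "score": score, "payload": p["payload"]} for score, p in scored[:limit]]
```

Given points tagged with a hypothetical `skill_level` payload field, `search(vec, points, {"skill_level": "beginner"}, min_score=0.5)` returns only beginner documents whose similarity clears the threshold, best match first.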

3. Grounded Content Generation

The Quiz & Lesson Agents synthesize the retrieved context into structured lesson plans. Every quiz question is linked to a specific source ID, ensuring the content is explainable and verifiable.
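The link between questions and sources can be checked mechanically. The sketch below assumes each generated question is a dict with hypothetical `id` and `source_id` fields; the project's real schema is not shown here.

```python
def unsupported_questions(questions: list[dict], retrieved_ids: set[str]) -> list[str]:
    """Return the IDs of questions whose cited source was not actually retrieved.

    A question with no `source_id` at all is also reported as unsupported.
    """
    return [
        q["id"]
        for q in questions
        if q.get("source_id") not in retrieved_ids
    ]
```

An empty result means every question cites a chunk that the retrieval step returned; any IDs in the result can be dropped or regenerated before the lesson is shown.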

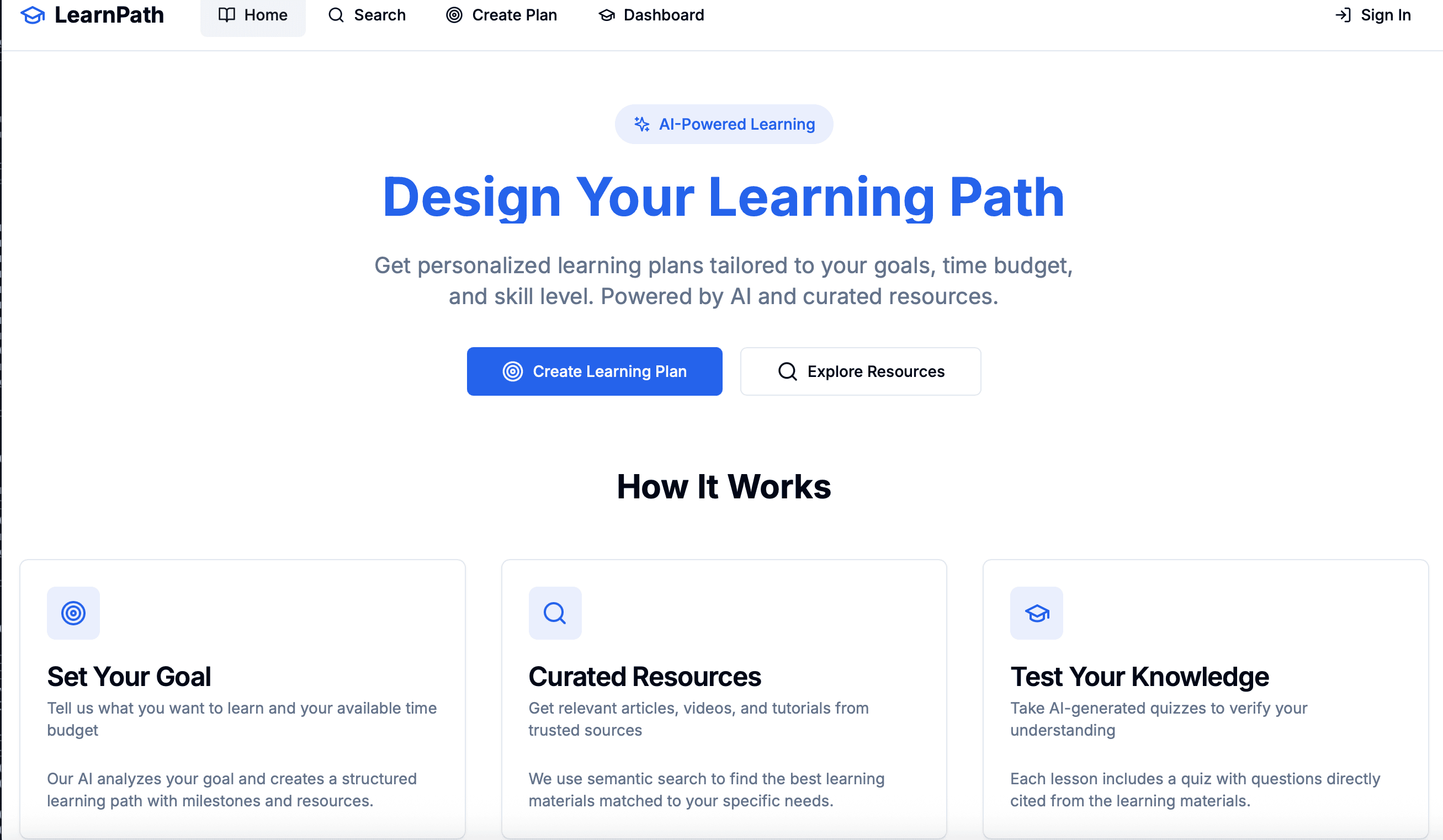

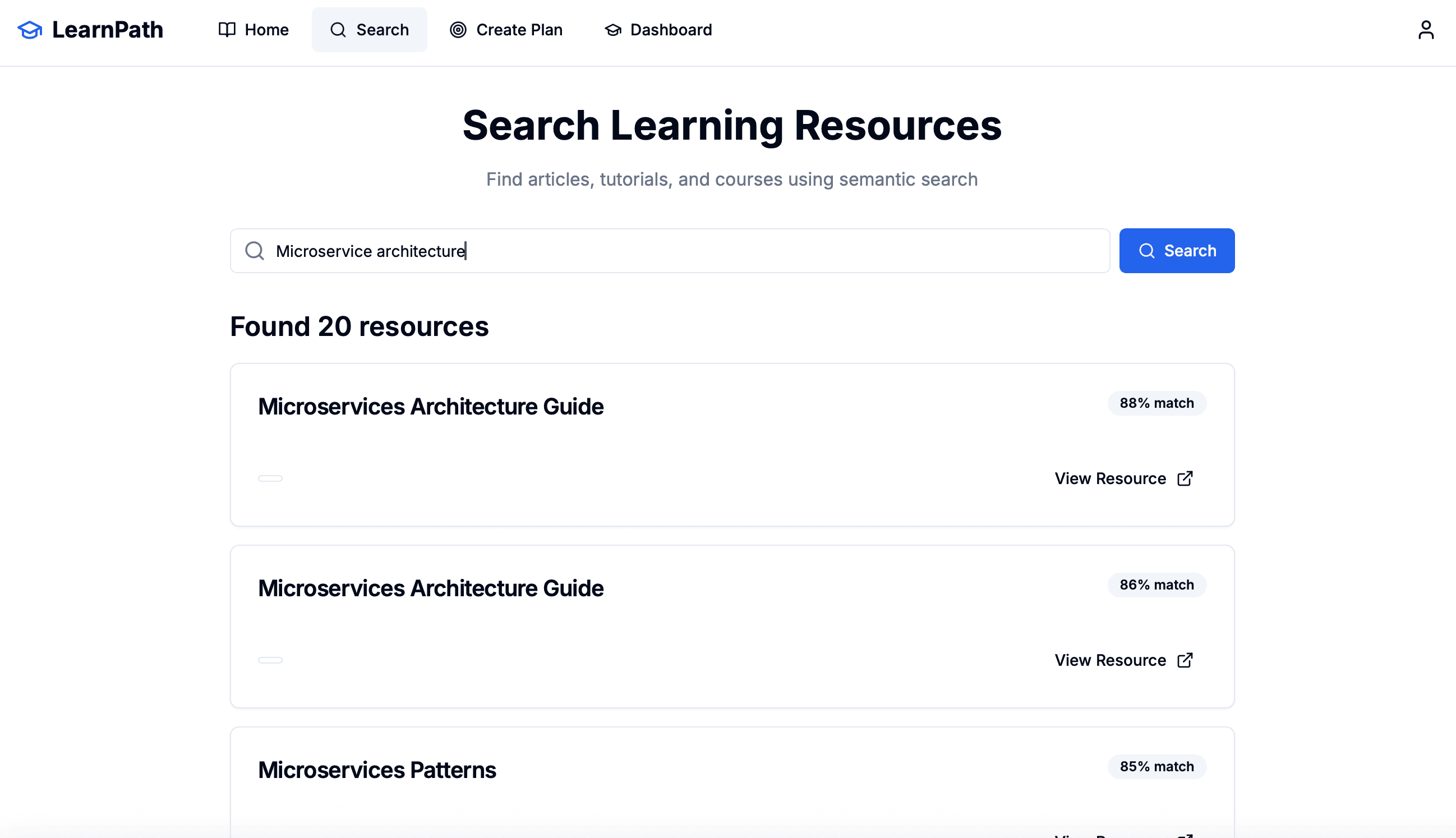

Resource Discovery Engine

This screen exposes the underlying RAG capabilities. It demonstrates how the system retrieves "ground truth" data before feeding it to the LLM. By using Qdrant's vector similarity search, we ensure the agent has the right context in its prompt to generate accurate plans.

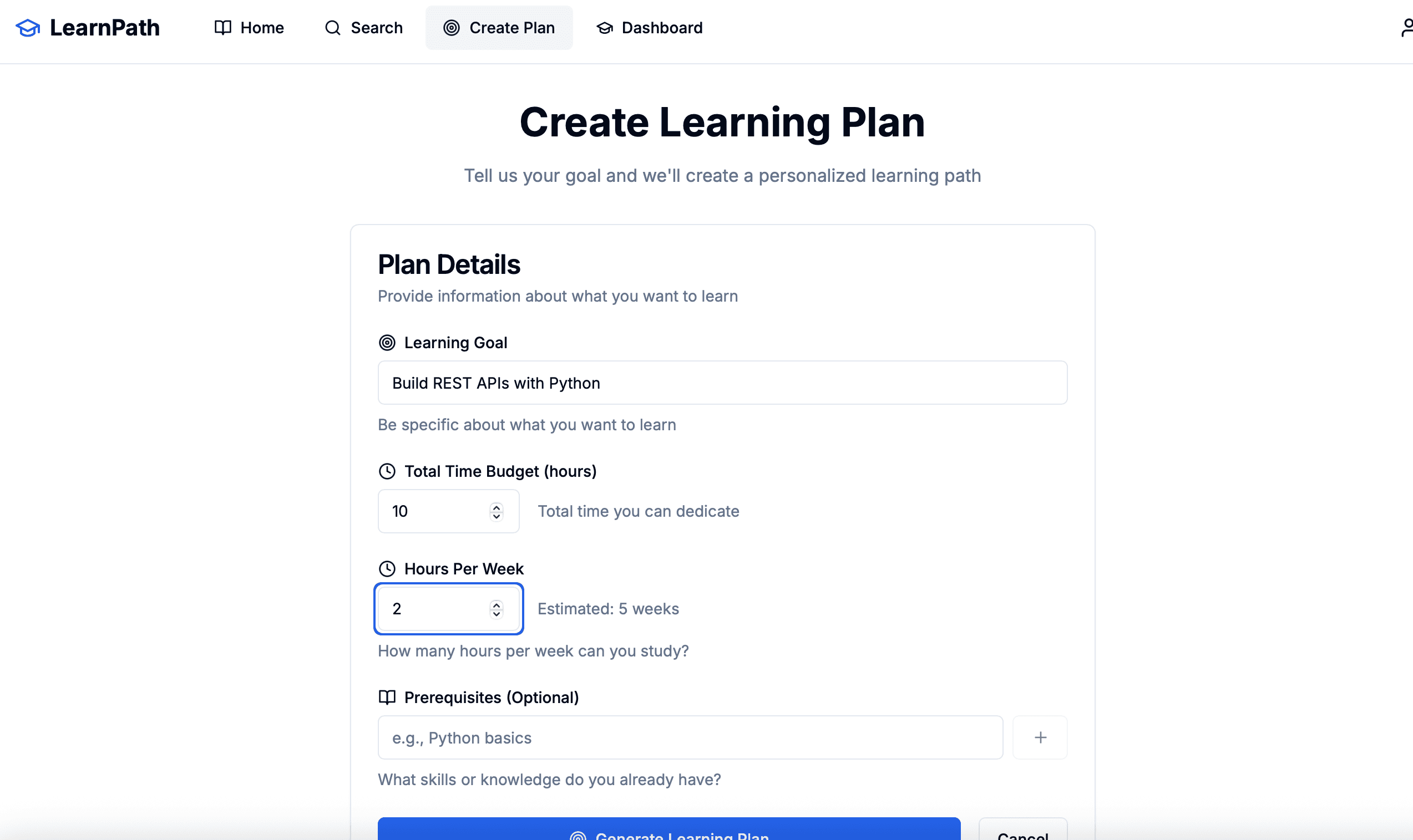

Structured Planning Interface

This form submits the "System Constraints" to the Planner. Unlike a chatbot, this follows a deterministic input-output contract, ensuring the generated schedule fits the user's exact numeric constraints (e.g., 4 weeks @ 5 hours/week).
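As a worked example of that contract, 4 weeks at 5 hours/week gives a budget of 20 hours, and a schedule either fits inside it or is rejected. The sketch below is one simple packing strategy, assumed for illustration; `build_schedule` and the topic list are hypothetical, and the real Planner may allocate time differently.

```python
def build_schedule(
    topics: list[tuple[str, int]], weeks: int, hours_per_week: int
) -> list[list[tuple[str, int]]]:
    """Pack (topic, hours) pairs into weeks in order, splitting topics across weeks."""
    if any(hours <= 0 for _, hours in topics):
        raise ValueError("every topic needs a positive number of hours")
    total = sum(hours for _, hours in topics)
    if total > weeks * hours_per_week:
        raise ValueError(f"{total}h of content does not fit in {weeks * hours_per_week}h")

    schedule: list[list[tuple[str, int]]] = [[] for _ in range(weeks)]
    week, free = 0, hours_per_week
    for name, hours in topics:
        while hours > 0:
            if free == 0:
                # Current week is full; the budget check above guarantees
                # another week is available.
                week += 1
                free = hours_per_week
            chunk = min(hours, free)
            schedule[week].append((name, chunk))
            hours -= chunk
            free -= chunk
    return schedule
```

With topics of 6, 8, and 6 hours and the 4 weeks @ 5 hours/week constraint, every week is filled with exactly 5 hours. Adding one more hour of content raises `ValueError` instead of silently overrunning the budget.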

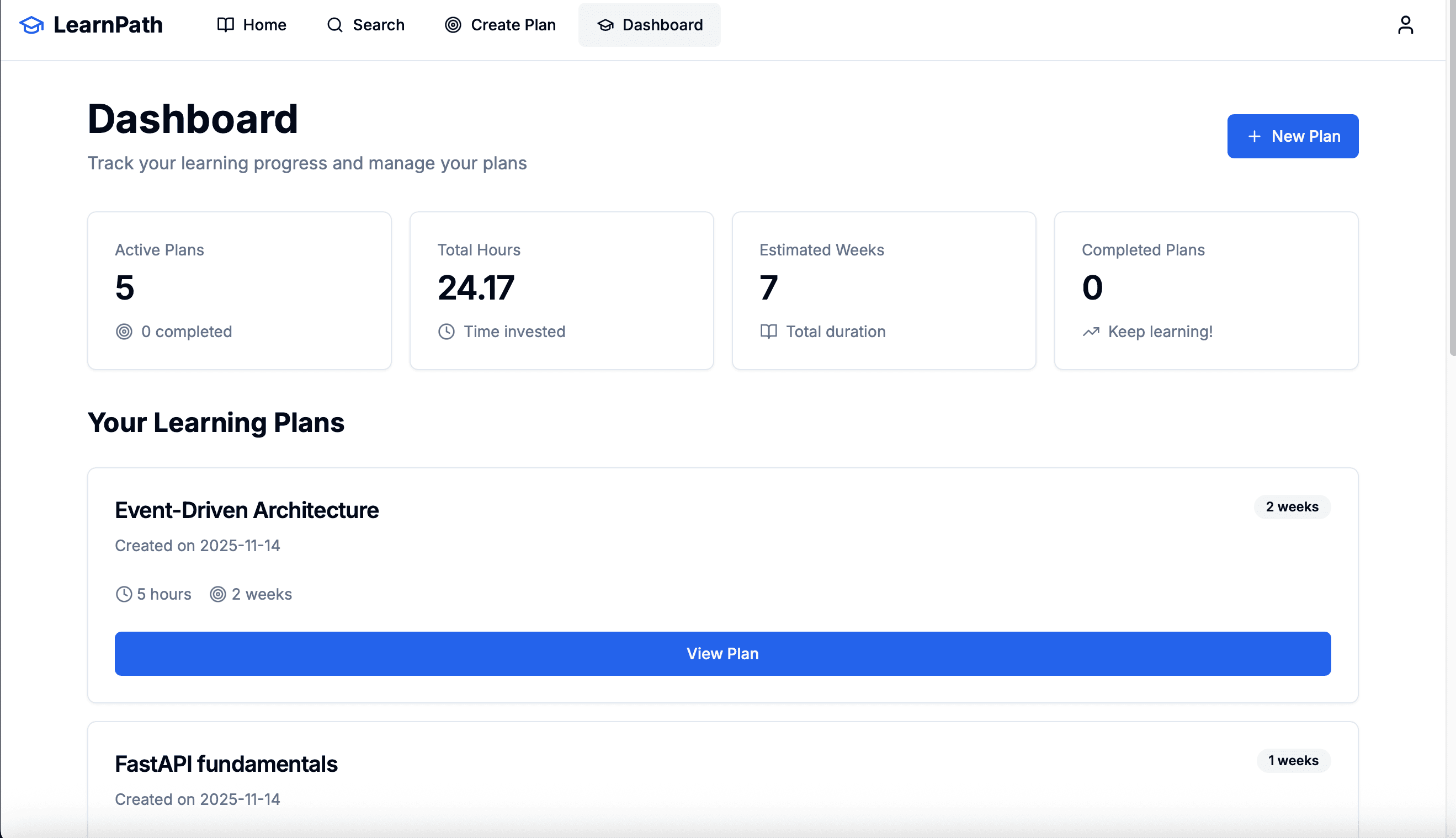

Observability & Progress

The dashboard reflects the persistence layer. Because the "Plan" is stored as a structured relational object in Postgres (not just a chat log), users can track granular progress, pause, and resume their learning paths indefinitely.
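To illustrate why a relational plan makes progress tracking straightforward, here is a small sketch using Python's built-in `sqlite3` as a stand-in for Postgres. The table and column names (`plans`, `plan_steps`, `done`) are assumptions and not the project's actual schema.

```python
import sqlite3


def open_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical plan schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE plans (id INTEGER PRIMARY KEY, goal TEXT NOT NULL);
        CREATE TABLE plan_steps (
            id INTEGER PRIMARY KEY,
            plan_id INTEGER NOT NULL REFERENCES plans(id),
            topic TEXT NOT NULL,
            done INTEGER NOT NULL DEFAULT 0
        );
        """
    )
    return conn


def create_plan(conn: sqlite3.Connection, goal: str, topics: list[str]) -> int:
    cur = conn.execute("INSERT INTO plans (goal) VALUES (?)", (goal,))
    plan_id = cur.lastrowid
    conn.executemany(
        "INSERT INTO plan_steps (plan_id, topic) VALUES (?, ?)",
        [(plan_id, topic) for topic in topics],
    )
    conn.commit()
    return plan_id


def complete_step(conn: sqlite3.Connection, plan_id: int, topic: str) -> None:
    conn.execute(
        "UPDATE plan_steps SET done = 1 WHERE plan_id = ? AND topic = ?",
        (plan_id, topic),
    )
    conn.commit()


def progress(conn: sqlite3.Connection, plan_id: int) -> tuple[int, int]:
    """Return (completed steps, total steps) for one plan."""
    done, total = conn.execute(
        "SELECT COALESCE(SUM(done), 0), COUNT(*) FROM plan_steps WHERE plan_id = ?",
        (plan_id,),
    ).fetchone()
    return done, total
```

Because each step is its own row, pausing and resuming is just a matter of reading the rows back; nothing has to be reconstructed from a conversation transcript.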

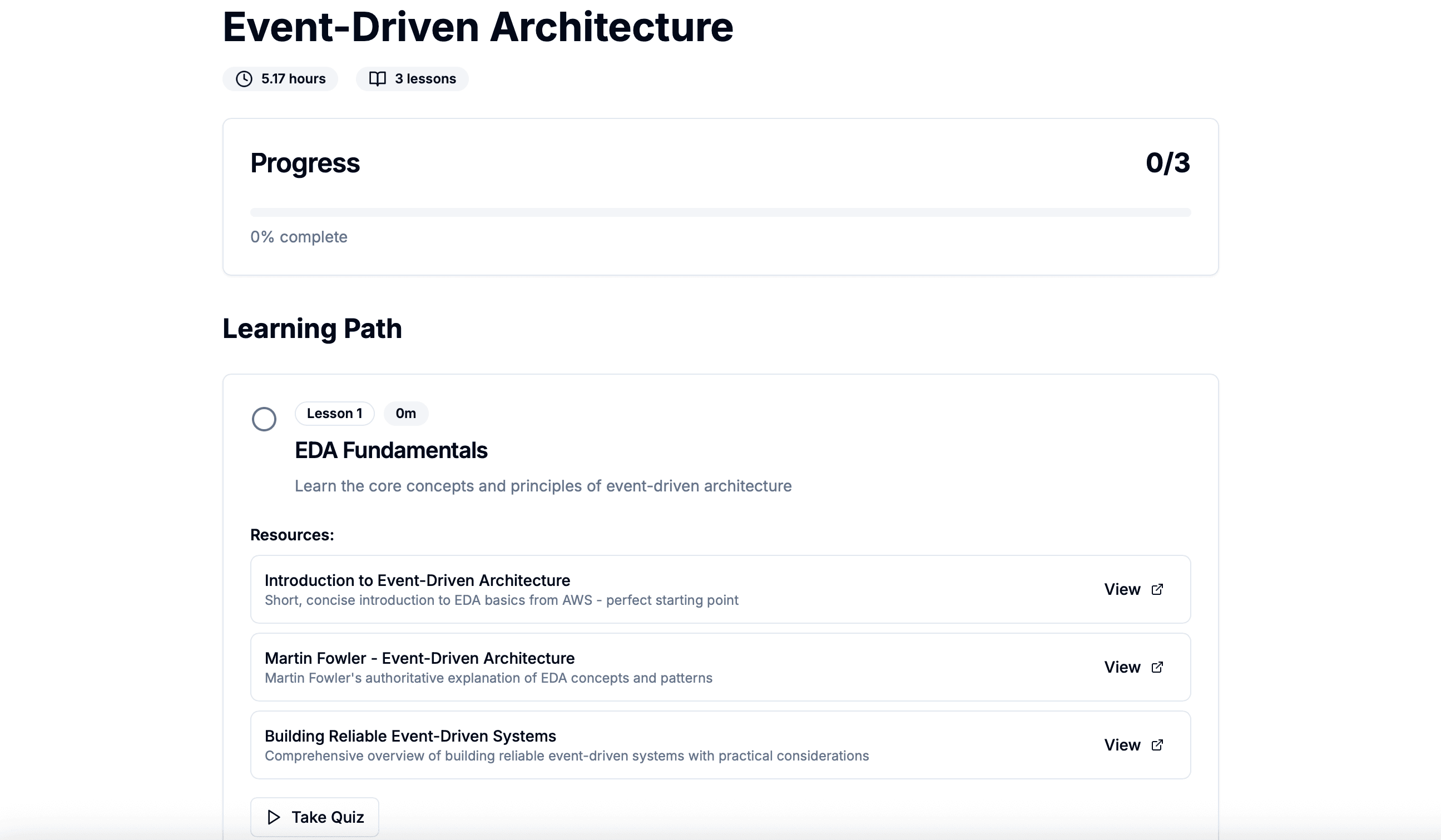

Grounded Lessons & Citations

This view validates the "Reference Architecture" claim: the AI doesn't just talk; it cites. Every resource listed here was retrieved via the RAG pipeline and verified by the LLM as relevant to the specific lesson topic.

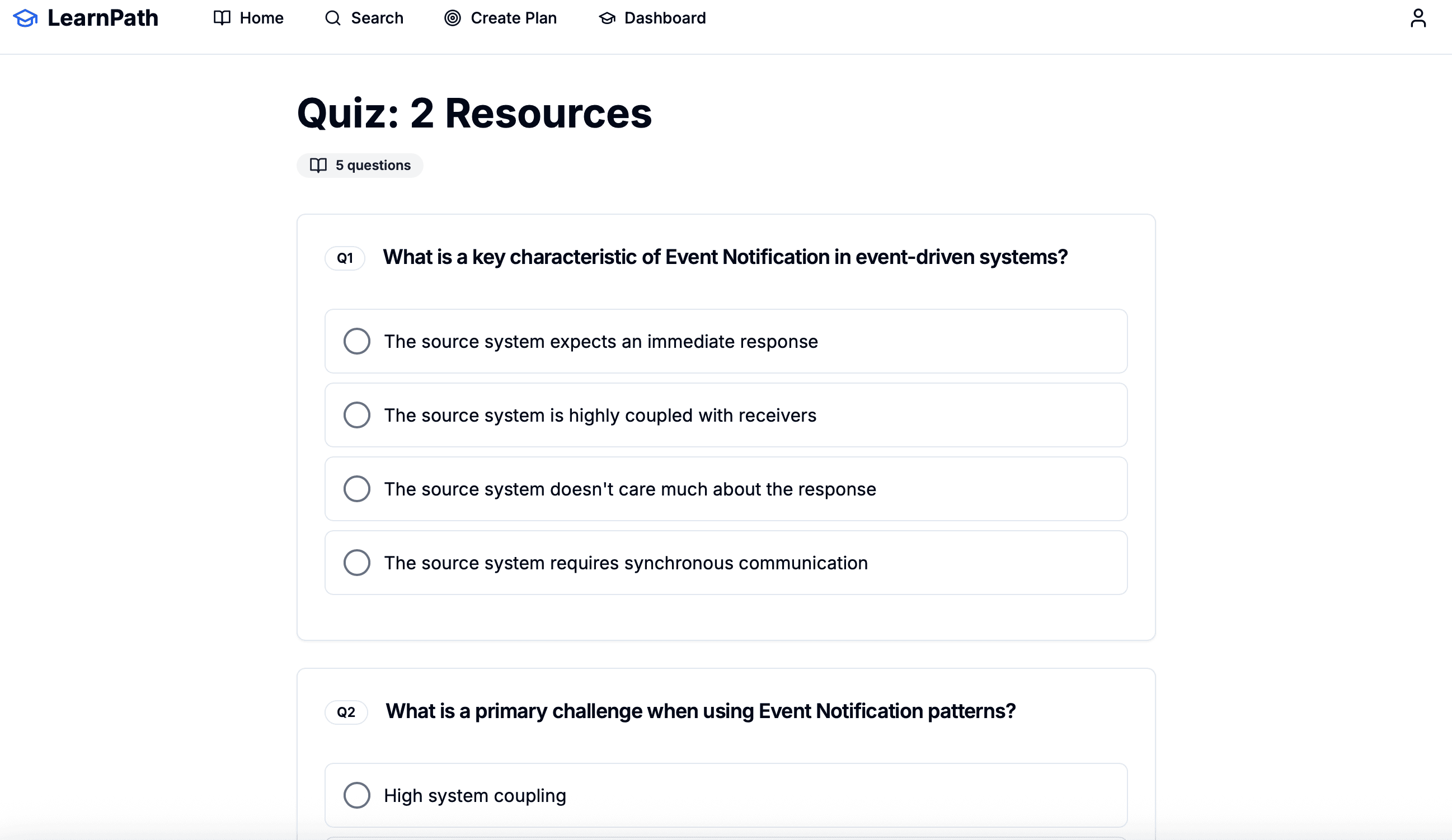

Verified Assessment Logic

To curb hallucination, the Quiz Agent is instructed to generate questions based only on the provided context chunks. If the RAG retrieval finds no data, the agent refuses to generate a question rather than inventing facts.
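The refusal rule can be enforced in code as well as in the prompt. The sketch below assumes a hypothetical `llm` callable that takes a prompt string and returns text; the chunk layout, `build_prompt`, and the prompt wording are illustrative only.

```python
from typing import Callable, Optional


def build_prompt(topic: str, chunks: list[dict]) -> str:
    """Assemble a prompt that exposes only the retrieved chunks to the model."""
    context = "\n".join(f"[{c['id']}] {c['text']}" for c in chunks)
    return (
        f"Write one quiz question about {topic}.\n"
        "Use only the context below and cite the source ID you used.\n"
        f"Context:\n{context}"
    )


def generate_question(
    topic: str, chunks: list[dict], llm: Callable[[str], str]
) -> Optional[str]:
    """Return a generated question, or None when there is nothing to ground it in."""
    usable = [c for c in chunks if c.get("text", "").strip()]
    if not usable:
        # Refuse before calling the model: no context means no question.
        return None
    return llm(build_prompt(topic, usable))
```

Checking for empty context before the model call means the refusal does not depend on the model following instructions; the caller simply sees `None` and can skip the quiz for that topic.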

Engineering Decisions (Why this Stack?)

Go for Orchestration: I chose Go for the API Gateway to handle concurrent requests and define strict service contracts. It acts as the "adult in the room," ensuring that if Python services hang or fail, the system recovers gracefully.
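The recovery behavior described above boils down to bounding every downstream call with a timeout and falling back when it fails. The gateway itself is written in Go; the Python `asyncio` sketch below only illustrates the pattern, and `call_with_timeout`, its retry count, and the fallback value are assumptions.

```python
import asyncio
from typing import Any, Awaitable, Callable


async def call_with_timeout(
    call: Callable[[], Awaitable[Any]],
    timeout_s: float,
    retries: int = 1,
    fallback: Any = None,
) -> Any:
    """Await `call()` with a deadline; retry on failure, then return `fallback`."""
    for _ in range(retries + 1):
        try:
            # wait_for cancels the pending call once the deadline passes.
            return await asyncio.wait_for(call(), timeout=timeout_s)
        except (asyncio.TimeoutError, ConnectionError):
            continue
    return fallback
```

A hung service then costs at most `timeout_s * (retries + 1)` seconds before the caller gets the fallback, for example a "degraded" status the UI can render, rather than waiting indefinitely.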

Python for AI Logic: Python remains the king of ML libraries. By isolating it in microservices, we get the best of both worlds: the vast ecosystem of LangChain/LlamaIndex and the operational stability of Go.

Qdrant for Production RAG: I chose Qdrant over plain pgvector for its native payload filtering and its high-performance Rust core, both of which matter when filtering resources by "skill level" or "content type" in real time.